Cate Blanchett – beloved thespian, film star and refugee advocate – is standing at a lectern, addressing the European Union parliament. “The future is now,” she says, authoritatively. So far, so normal, until: “But where the fuck are the sex robots?”

The footage is from a 2023 address that Blanchett actually gave – but the rest has been made up.

Her voice was generated by Australian artist Xanthe Dobbie using the text-to-speech platform PlayHT, for Dobbie’s 2024 video work Future Sex/Love Sounds – an imagining of a sex robot-induced feminist utopia, voiced by celebrity clones.

Much has been written about the world-changing potential of generative AI models, including the image generator Midjourney and OpenAI’s large language model GPT-4, which are trained on vast swathes of data to create everything from academic essays, fake news and “revenge porn” to music, images and software code.

Proponents praise the technology for speeding up scientific research and eliminating mundane admin, while on the other side, a broad range of workers – from accountants, lawyers and teachers to graphic designers, actors, writers and musicians – are facing an existential crisis.

As the debate rages on, artists such as Dobbie are turning to those very same tools to explore the possibilities and precarities of the technology itself.

“There’s all of this ethical grey area because the legal systems are not able to catch up with anywhere near the same speed at which we’re proliferating the technology itself,” says Dobbie, whose work draws on celebrity internet culture to interrogate technology and power.

“We see these celebrity replicas happening all the time, but our own data – us, the small people of the world – is being harvested at exactly the same rate … It’s not really the capacity of the technology [that’s bad], it’s the way flawed, dumb, evil people choose to wield it.”

Choreographer Alisdair Macindoe is another artist working at the nexus of technology and art. His new work Plagiary, opening this week as part of Melbourne’s Now or Never festival, followed by a season at Sydney Opera House, uses custom algorithms to generate new choreography, which the dancers receive for the first time each night as they perform it.

While the AI-generated instructions are specific, each dancer can interpret them in their own way – making the resulting performance more of a collaboration between man and machine.

“Often the questions [from dancers] early on will be like: ‘I’ve been told to swivel my left elbow repeatedly, to go to the back corner, imagine I’m a cow that’s just been born. Am I still swivelling my left elbow at that point?’,” says Macindoe. “It quite quickly becomes this really interesting discussion about meaning, interpretation and what is truth.”

Not all artists are fans of the technology. In January 2023, Nick Cave posted an eviscerating review of a song generated by ChatGPT that mimicked his own work, calling it “bullshit” and “a grotesque mockery of what it is to be human”.

“Songs arise out of suffering,” he said, “by which I mean they are predicated upon the complex, internal human struggle of creation and, well, as far as I know, algorithms don’t feel.”

Painter Sam Leach disagrees with Cave’s idea that “creative genius” is exclusive to humans, but he often encounters this kind of “blanket rejection of the technology and anything to do with [it]”.

“I’ve never really been particularly interested in anything related to the purity of the soul. I really view my practice as a way of researching and understanding the world that’s around me … I just don’t see that we can construct a boundary between ourselves and the rest of the world that allows us to define ‘me as a unique individual’.”

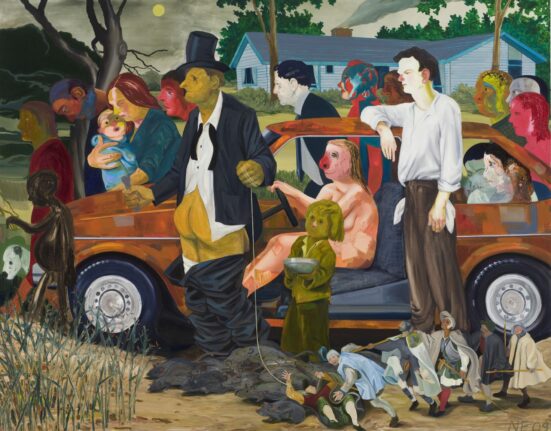

Leach sees AI as a valuable artistic tool, enabling him to grapple with and interpret a vast gamut of creative output. He has customised an array of open-source models, training them on his own paintings as well as reference photographs and historical artwork, to produce dozens of compositions. He turns a select few of these into surreal oil paintings – such as his portrait of a polar bear standing over a bunch of chrome bananas.

He justifies his use of sources by highlighting the hours of “editing” he does with his paintbrush to refine his software’s suggestions. He even has art critic chatbots to interrogate his ideas.

For Leach, the biggest concern around AI is not the technology itself, or how it’s used – but who owns it: “We’ve got this very, very small handful of mega companies that own the biggest models, that have incredible power.”

One of the most common concerns around AI involves copyright – a particularly complicated issue for those working in the arts, whose intellectual property is used to train multimillion-dollar models, often without consent or compensation. Last year, for example, it was revealed that 18,000 Australian titles had been included in the Books3 dataset, without permission or remuneration, in what Booker prize-winning novelist Richard Flanagan described as “the biggest act of copyright theft in history”.

And last week, Australian music rights management organisation APRA AMCOS released survey results that found 82% of its members were concerned AI could reduce their capacity to make a living through music.

In the European Union, the Artificial Intelligence Act came into force on 1 August, to mitigate these sorts of risks. In Australia, however, while eight voluntary AI ethics principles have existed since 2019, there are still no specific laws or statutes regulating AI technologies.

This legislative void is pushing some artists to create their own custom frameworks – and models – in order to protect their work and culture. Sound artist Rowan Savage, a Kombumerri man who performs as salllvage, co-developed the AI model Koup Music with the musician Alexis Weaver, as a tool to transform his voice into digital representations of the field recordings he makes on Country. He will be presenting the process at Now or Never festival.

Savage’s abstract dance music sounds like dense flocks of electronic birdlife – animal-code hybrid life forms that are haunting and foreign, yet simultaneously familiar.

“Sometimes when people think of Aboriginal people in Australia, they think of us as associated with the natural world … there’s something kind of infantilising about that, that we can use technology to speak back to,” says Savage. “We often think there’s this rigid divide between what we call natural and what we call technological. I don’t believe in that. I want to break it down and allow the natural world to infect the technological world.”

Savage designed Koup Music to give him full control over which data it is trained on, to avoid appropriating the work of other artists without their consent. In turn, the model protects Savage’s recordings from being fed into the larger networks Koup is built on – recordings he considers the property of his community.

“I think it’s fine for me personally to use the recordings that I make of my Country, but I wouldn’t necessarily put them out into the world [for any person or thing to use],” says Savage. “[I wouldn’t feel comfortable] without talking to important people in my community. As Aboriginal people, we’re always community minded, there’s not individual ownership of sources in the same way that the Anglo world might think about it.”

For Savage, AI offers great creative potential – but also “quite a lot of dangers”. “My concern as an artist is: how do we use AI in ways that are ethical, but also really allow us to do different and exciting things?”