It is tough making a living as an artist. An estimated 85% of visual artists make less than $25,000 a year, according to online magazine Contemporary Art Issue. And now, along with paying artists “in exposure” and undervaluing their work, there’s a new threat to their income: generative artificial intelligence.

In order to train AI models, the companies behind them commonly copy art they find online and use those images without paying for them. Lawsuits are pending on copyright infringement issues, but there are two new free tools that artists can use to fight back: Glaze, which protects artists from style mimicry, and Nightshade, which poisons any AI program unlucky enough to try to use that art for data.

Ben Zhao is a computer science professor at the University of Chicago and the lead on the Glaze and Nightshade projects. He joined “Marketplace’s” Kai Ryssdal to talk about how these tools work and the impact he’s hoping they have on the future of creative industries. The following is an edited transcript of their conversation.

Kai Ryssdal: As layman-like as you possibly can, these two tools that I mentioned up in the introduction, Glaze and Nightshade — again, very generically, very basically — what do they do? How do they work?

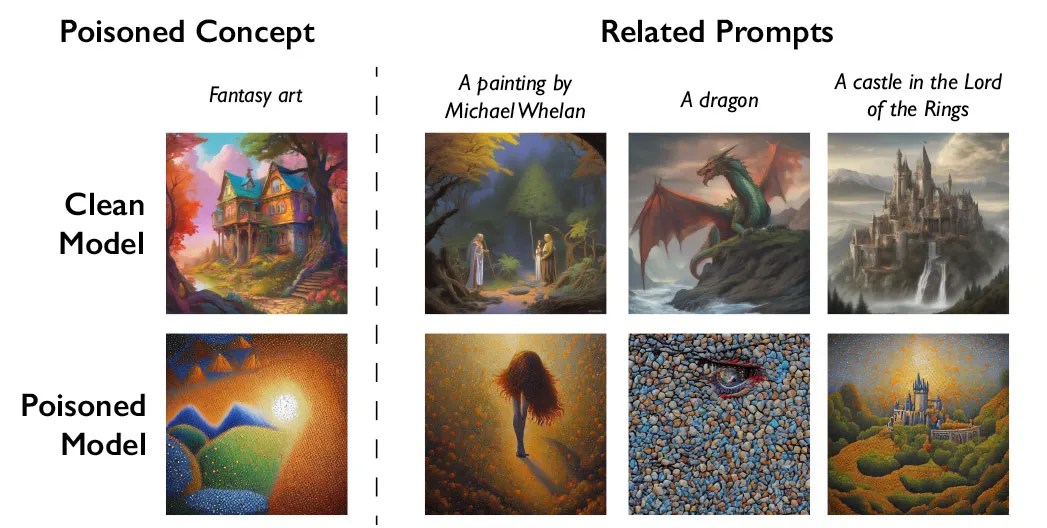

Ben Zhao: Oh, wow, that’s a lot in one question. So let me break it down: OK, so two tools, right? They do different things. What Glaze does is, when you run it on images of your art, it changes them in very subtle ways so that when we look at them, we can barely see anything at all. But when AI models look at them, they actually perceive something very, very different. So if you do this and protect your art, then someone trying to mimic your art style using one of these models will likely get a style that looks quite a bit different from what they wanted.

What Nightshade does is a little bit different. Nightshade targets these base models, the larger models that companies are training. In training, what they do is take millions and millions of images from online. And of course, there are lots of issues right now in the legal system about legality, licensing agreements, you know, no consent, etc. But Nightshade is designed to make this process more expensive by introducing basically a little poison pill inside each piece of art, so that when these models take in a lot of these images, they start to get poisoned. This accumulates, and then the models get really confused about what a cat actually looks like, what a dog actually looks like. And when you ask for a cow, maybe it’ll give you a 1960s Ford Bronco. And really, the goal is not necessarily to break these models. The goal is to make it so expensive to train on unlicensed data scraped from the internet that these companies actually start thinking about licensing content from artists to train their models.

Ryssdal: Right, which is, as you say, the subject of at least one lawsuit, and probably many more to come. This does seem a little bit, though, like the black-hat hacker versus white-hat hacker thing, in that the people who are writing these AI models will eventually take apart your tools and figure out how to get around them. And thus we have some kind of AI arms race.

Zhao: Sure, that may well be. And in reality, you know, most security systems are like this. It’s always a sort of ongoing evolution. But that doesn’t mean it doesn’t have an impact. The point of security, when it comes to defenses or attacks, is to raise the price for the other side. So in this case, all we’re trying to do is raise the price for unauthorized training on scraped data.

Ryssdal: Have you heard from your computer science peers who work for Stable Diffusion or Midjourney? And they’re like, “Ben, what are you doing, man? You’re killing us”?

Zhao: No, actually, surprisingly, the feedback has overwhelmingly been great. Of course, some people at some of the companies are like, “Yeah, you know, this seems, like, a little aggressive. This seems like something that maybe we can talk out, maybe we can, you know, let legislation take care of this.” But I think what this does is try to balance the playing field, because, if you think about it, creatives — whether it’s individual artists or actual companies who own IP [intellectual property] — there’s nothing they can do against AI model trainers right now. Nothing. The best they can do is, like, sign up for an opt-out list somewhere and hope that the AI trainers are kind enough to respect it. Because there’s no way to enforce it. There’s no way to verify it. And that’s for the companies who actually care. The smaller companies, of course, will just do whatever they want and face absolutely no consequences.

Ryssdal: You know, when ChatGPT and all the rest first broke onto the scene, and those of us who are not in the field became aware of it, it seemed — I’m exaggerating here — but it seems a little bit like magic. And maybe this is an uninformed question, but is this hard computer science?

Zhao: Hard? I mean, it takes a few Ph.D.s to think about this idea. It’s reasonably interesting and nontrivial in the sense that, if it was easy, somebody would have done this a while ago. So yeah, I guess it’s hard. But you know, if research was easy, then it wouldn’t be interesting.

Ryssdal: Yeah. So I was gonna let you go, but something you just said piqued my interest. You said it’s nontrivial. As you see it, a guy in this field — deep in this field — what’s at stake here?

Zhao: Ah, boy, in terms of the grander scale, if I step back and look at what’s happening with generative AI and human creators today, I think, “Boy, everything is at stake.” Because what we’re looking at are tools being deployed without regulation, without ethical guardrails, without care for the actual damage they’re doing to human creators in these industries. And if you project that forward, it gets worse. These models depend on human creativity to fuel them, to help them train, to help them get better. They’re not going to get much better if there is no more training data. And so even AI people who are super thrilled about these models and the future they bring should be aware of this. They should be cognizant of the fact that you need to support human creatives in this future, because otherwise these models will just shrivel up and die.
