Generative AI exploded into the public consciousness at the end of 2022 with the release of ChatGPT, but by that point it was already causing problems for artists and illustrators. Those problems have only gotten worse as the AI hype took off over the past year.

You’ve probably heard the story by now: AI companies indiscriminately took a ton of copyrighted material off the web without anyone’s permission, trained models on all that data, then acted like they weren’t to blame when people started using image generators like DALL-E and Midjourney to create works in the same style as artists whose work had been stolen to train them. But there’s another piece to that: it isn’t just an insult to those artists; it’s actively taking work away from them.

To learn more about the impact these AI tools have had on artists and how people in the profession are fighting back, I spoke to award-winning concept artist Karla Ortiz. She not only has an impressive resume that spans mediums and includes work with companies like Marvel, Ubisoft, and Wizards of the Coast, but she’s been at the forefront of the campaign against generative AI. Ortiz was one of three names on the first class action lawsuit launched against AI image generators back in January 2023 and her activism hasn’t stopped there.

Sign up for Disconnect

Tech critic Paris Marx provides the critical take on Silicon Valley you’ve been missing

No spam. Unsubscribe anytime.

This interview has been edited for length and clarity.

Generative AI and AI-generated media have been in the news a lot for the past year or so. When did you come across these tools and when did you start to realize they would become an important issue for illustrators and concept artists like yourself?

I became aware of generative AI around April 2022. I came across a website called Weird Wonderful AI that was promoting something called Disco Diffusion, and it had a section called the Artist Studies. In those Artist Studies, I saw the names of so many of my peers, people I knew personally, and one of the things that really blew my mind was that the results had some similarities to their work. At first I thought it must be some kind of university project, and I wanted to know more about it, so I reached out to some of the artists on the list, and to my shock, they didn't know what I was talking about.

This site had studied those artists without the artists even knowing about it. So we looked into who was making these studies, and we found out they were also selling merchandise that looked suspiciously like the studies: mugs, NFTs, you name it. The artists in these studies had already reached out to them, and we were like, "Hey, can you take their names down? They didn't want to be a part of this." But these folks straight up ghosted us. That was my first introduction to generative AI.

Fast forward to August and September of 2022, when Midjourney, DALL-E, and Stable Diffusion were really blowing up. Because that first interaction had left such a bad taste in my mouth, I was like, "Okay, let me research these guys and see what's up." What I found was disturbing. Basically, these models had been trained on almost the entirety of my work, almost the entirety of my peers' work, and the work of almost every single artist I knew. I spent several afternoons going, "What about this artist? There they are in the dataset. What about that artist? There they are in the dataset." To add insult to injury, these companies were allowing, and in some cases encouraging, users to use our full names and our reputations to make media that looked like ours and then immediately compete with us in our own markets.

The thing that a lot of people don't know is that artists like myself were already feeling the effects of this in our industry back in August 2022. Rumors started to pop up that people had lost jobs, and then the rumors became firsthand accounts: "Yes, I actually lost this." I knew of students who had lost their internships because companies decided to use generative AI. That took various forms in the entertainment industry, in music, and in illustration. That same winter of 2022, the San Francisco Ballet ran a whole marketing campaign for The Nutcracker, which is huge, and it was generated entirely with Midjourney. But that's someone's job. That's high profile. That could have gone to a photographer or an illustrator.

Can you tell us what goes into being a concept artist and what the response of your profession has been?

A concept artist essentially solves visual problems for a production or a client. It starts with a description from the books, and then eventually a description from the script. From there on, rather than give that to VFX or to costume and spend a lot of money to build these things to then find out they’re going in the completely wrong direction, you start with either a drawing or a painting. That’s literally it. That’s the job. So basically, I’m there to problem solve for a director or a production what something could look like. That could be for characters, clothing, creatures, or environments. All of these things require paintings. We’re often some of the first people in pre-production and, more often than not, we usually push the look of a full picture, sometimes even doing something called keyframes, which are actual moments in the film. We’re kind of like the unsung heroes for a lot of moviemaking because the work influences all other departments.

So that's our role, but you can see how that role, providing imagery that solves a visual problem by going from text to image, is immediately put at risk by tools or models that explicitly do that. For those models to do that while using the names of people who work in these industries is especially egregious.

I'm a board member of the Concept Art Association, which is this wonderful little association, and I brought my concerns to them. Immediately, the Concept Art Association wanted to do a town hall. I think it was early September 2022. We didn't know much about it yet, but I got to bring in a machine learning expert, Abhishek Gupta from the Montreal AI Ethics Institute, and I just had a conversation with him where I'm like, "Here's all the information I gathered. Is this bad? Is this okay?" And he was horrified. He didn't know how bad the training data for these models was, how exploitative the models were, or what the companies were allowing users to do. That's been the case with many other machine learning experts we've spoken to. That gave us the motivation to be like, "It's not us being upset without knowing the details. Now we know the details. Now we know how this works. This is bullshit."

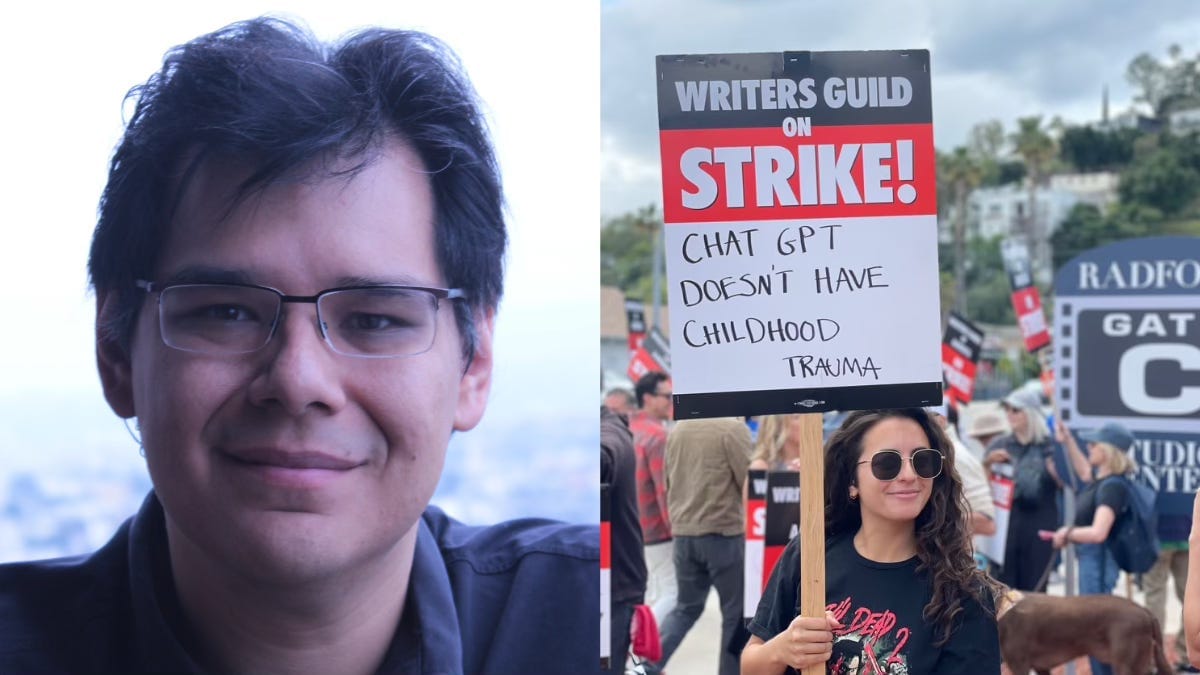

“The act of writing is not typing”

An interview with John Lopez about AI and the writers’ strike

From there, my path branched out in many different directions. On one end, I helped the Concept Art Association step up its efforts. They focus on legislation, so they've been hitting DC and meeting with government officials. The other branch I took was the litigation side. I became one of the first three plaintiffs on a class action lawsuit. At the time, it was against DeviantArt, Midjourney, and Stability AI, and Runway ML has since been added as well. On top of trying to make a living, this has become my part-time job.

In October, I went to an artist convention called Lightbox, and one of the things that got to me was industry veterans saying what I already knew to be true, because I had gone through a project like this myself: pitch work is dead. Pitch work is when a director, writer, producer, or any combination of those gets together with an artist and says, "We want to pitch to studios and we need imagery." All of that has now been handed to generative AI. I was part of a pitch work production. I was working closely with the director. It was going awesome. This was during the height of the strikes, so it would have been welcome work. But as soon as I gave my quote, they totally disappeared, and their entire pitch deck was already full of generative AI images from Midjourney. So many people are saying, "Who cares if I can't copyright this? Who cares if this is exploitative? Who cares if this is replacing the people I've worked with for decades? I'm still going to use it." It's pretty bad.

Can you talk a bit more about the risk that generative AI poses to artists like yourself and how you’re already seeing it change the work of illustrators?

For starters, people just aren't working as much as they were before. I especially worry for people who are starting their careers. The bigger companies, the ones with access to big legal teams, aren't touching this because they know it's a fucking mess. That doesn't mean their outsourcers or their marketing teams are following those guidelines, but the companies themselves aren't touching it. It's a lot of the mid-sized or even smaller companies without access to those legal teams that are implementing generative AI right away, and that means a lot of artists have been laid off or have had their duties slashed in half.

For example, I'm a contractor. I've been part of three different productions that have used generative AI in some capacity, and they're big, so there goes my theory. The problem is that I charge by the hour, and whenever generative AI was used, it was used to slash those hours. They'll show the director, side by side, "Here's what you did and here's what this model did." I can show maybe six or seven images, tops, while the model showed a hundred. Whether or not its output was chosen, that took away time that I, the art team, and all the other contractors could have spent developing new visuals. That already diminished our roles and our earnings.

Then you have, again, a lot of students who are transitioning from student to professional, and they rely on a lot of the smaller jobs too. But those are the jobs most likely to be using generative AI right now. Recently I was looking at Global Game Jam, a world-renowned game jam that is usually a great start for artists. It's being sponsored by Leonardo AI, which is powered entirely by Stable Diffusion, and they're telling people to generate assets with it. That completely cuts off new artists' ability to get their foot in the door.

There’s a bit of a creative working ecosystem where there are natural cycles to someone getting their start to then having enough experience to gain a higher level in their career and make a living out of it. All of those doors are now starting to close because of generative AI. With the works that generative AI makes, the metric isn’t whether something is artistic or has quality, because if that were the case then people would win. The metric is the market itself. When you have a team trying to slash costs who say, “I could hire this artist full time, that’s a whole year’s worth of salary, or I could just pay a little subscription fee,” they’re going to go for the model.

Veterans who should be respected for their incredible contributions to our industry have been approached by high-profile production houses asking, "Can you paint over this Midjourney image? Oh, and we'll pay you half." That's happening right now. In film, at least, there's decent pay a person can make a living off of, and that's now being lowered. And it's going so much faster than any of us ever imagined. There's a lot of angst and depression, even among established professionals who are like, "I've given my whole life to this. It's a lifetime of work." And then some company says, "That lifetime of work, that dedication, it's now mine. We're gonna compete against you, we're gonna make insane amounts of money off your work, and you don't get a say." That's fucking a lot of people up. It's a really tough time.

I can only imagine. You talked about how you’ve been involved in a lot of organizing around generative AI and even litigation against some of these companies. Can you tell me a bit more about the work you’ve been doing and the impacts that you’ve seen from it?

I've come to realize my coping mechanism is action. I've been so lucky that so many wonderful people have also been working extremely hard and taking action. There's been a sense of solidarity and organizing among artists that I haven't seen in a really long time. Artists in particular are uniquely at risk in ways that other creative fields aren't. A lot of us are contractors, and we don't have a clear union. For concept artists in particular, our union representation is split in two, between the Art Directors Guild and the Costume Designers Guild. Oftentimes we're in the same union as our bosses, and some of those bosses really want to use generative AI. The unions are trying to work that out, and I hope they do. But the majority of us are contractors, and we don't have the same protections that the recording industry, SAG-AFTRA, or the Writers Guild might have.

Because of that lack of protections, we've banded together and advocated for ourselves in a way that has been very inspiring and very hopeful. Last year, the Concept Art Association said, "We need to be in DC because we have to have a voice." And we're like, "Let's just do the same thing other companies do. Let's hire a lobbyist. Let's hire someone who has the connections." So we did a fundraiser, and 5,000 people from all around the globe gave enough to fund a full year of lobbying: a full year of action, a full year of events. Because of that, I got to go to the Senate and testify. I got to be in the same room as the Stable Diffusions and the Adobes. I was definitely the poorest person there, because next to me were the top lawyer from the recording industry, the policy person for Stability, and the top lawyer for Adobe. But it was impactful. I got to tell the senators exactly what had happened and exactly how we were affected. Between that and a lot of conversations behind the scenes with congressmen, staffers, regulatory agencies, and so on, that little organization has made a huge splash.


There's a continued push to form alliances between artist groups and artist coalitions. There's the Human Artistry Campaign, a coalition of 140 different artist groups. Trying to tell everybody that it's not enough to keep just your own creative sector safe, that we're in this together, has been interesting. I'm tired; I really just want to paint all day. But again, it's existential. I'm very happy to know I'm not the only one. I hope that's enough to make some solid changes.

In terms of those changes, what do you think is going to be necessary to rein in these companies and these tools? Do you think regulation is necessary? Do you think litigation will deliver that? And is there anything the public can do to help move that cause forward?

It’s not a “one solution,” it’s an “all solutions” type of situation. We need legislation. We need regulation. We need litigation. We need education. We need public relations. We need the public to be like, “No, I will not support your product if it contains generative AI because it’s an exploitative technology that is meant to replace the same people who’ve worked on the things that we’ve loved for so long.” Eventually it’s coming for anyone whose work will be digitized.

In terms of litigation, we need the courts to look at this and say, "This exploitative practice of indiscriminately grabbing whatever data you can find on the internet and saying, 'Oh, it's publicly available, so I can profit from it,' even if that data is copyrighted, biometric, or private, is not okay." Companies should not be able to get away with this, and I feel like the courts should be unanimous in that decision and say, "It's not okay to train models on the work of people who do not want to be part of it and who were never even asked." We also need relief and payment from all these companies that made all this profit on the backs of artists and of people who didn't even want to be a part of it. Those companies need to pay these people.

In terms of regulation, we need so much action, and we need it yesterday. There's a specific and wonderful term that sounds metal as hell: "algorithmic disgorgement." It's a remedy the FTC has used before, basically the destruction of any algorithm, dataset, or model that contains ill-gotten or illicit data. These models definitely fit that bill without a shadow of a doubt. We recently found out that even child abuse material is in these datasets. So we need algorithmic disgorgement. We need destruction of these models right off the bat, because once a model is trained on data, it can't forget.

After that, we need regulations or rules stipulating that models should only be trained on the public domain, like government archives, stuff that's available for everybody to use, with any expansion beyond that done with the explicit consent, credit, and compensation of whoever owns that data. I'm not talking about, "In order for you to get a job, you need to say you'll give us your data," because that's gross. And I'm not talking about, "We'll give you 0.005 cents if you give us your image," because that's equally gross. I'm talking about serious wages here.

We also need immediate transparency for all models, because we can't have another OpenAI situation, where they've gotten away with not telling the public what their models are trained on. There's definitely copyrighted data in there. I also don't want to see a situation where, just because a company holds copyright through work for hire (where we give away all of our rights in order to work with that company), it can do whatever it wants with that work. For example, I worked with Disney for eight years or so. Disney owns so much of my work, and there's nothing stopping them from generating a Karla Ortiz model internally and never having to hire me again. That's a real possibility, and we need worker protections against it.

So we need a lot. As for the public, again: don't engage with theft. If you see something that uses generative AI, raise a ruckus and complain about it. It does work. A lot of companies have steered away from it because of the backlash they've seen. It sounds silly, but it's true: vote with your money on this stuff. Don't reward a product that used generative AI. Call them out.

I think all those points are so important. And I love the focus on algorithmic disgorgement instead of just regulating it. Any final thoughts?

One more thing: send opt-out procedures into a deep, dark hole. Fuck that. It's something a lot of the AI companies have been pushing for quite a while because it's easy. It shifts the burden of responsibility onto people in a way that's really irresponsible, but also unfeasible.

There are hundreds of visual models. Does that mean I have to opt out of every single one of those models? What about every time they update? The method of opting out is incredibly inefficient. For example, OpenAI not too long ago was like, “Yeah, we’ll opt you out, but you have to show how we’ve used your work.” How do you do that when they’re not even open and transparent about the work that they’ve used? You can’t. That also places undue burden on people who might not know the language, who might not know the technology, who might not even be aware that this is happening because they’re just not online. It’s completely reprehensible.

They know that if they can push opt-out only, they can still have access to all that ill-gotten data. And that's the data that powers them. That's the secret sauce. But if you do algorithmic disgorgement and implement opt-in, then they have a problem, because hardly anybody wants to give away their data. Why? Because those models are going to compete with them in the future. Why would you do that? Opt-in only.
